Marketers love a new theory. The latest? That LLM-powered search tools like ChatGPT and Perplexity are recognizing, rewarding, and even prioritizing “brands,” and that if you’re invisible in these results, you must need more PR, more Wikipedia citations, or special schema. Let’s be clear: that’s complete nonsense.

Why Your Brand Isn’t Visible in LLMs

You’ve probably sat through pitches or LinkedIn hot takes blaming everything from missing schema markup to a lack of Reddit mentions or PR. But here’s the truth: your brand’s invisibility in ChatGPT or Perplexity search has nothing to do with Schema, llms.txt, or classic link-building.

So, why aren’t you there?

Because when it comes to Google vs. LLMs, it’s still all about Google. LLMs rewrite your search query behind the scenes. The query you test in Google, where you might rank #1, isn’t passed to the LLM engines unchanged. Instead, they fan out a variety of related queries, rewording, extending, or narrowing the original question to fetch broader data. Let’s call this phenomenon your Query Fan Out.

Query Fan Out in SEO

Understanding your Query Fan Out is the key to reverse-engineering LLM visibility.

Instead of just matching “AI SEO tools” directly, an AI might silently branch it into things like “best AI tools for keyword research”, “AI content optimization tools”, and “AI tools for technical SEO”. It then pulls content that answers those smaller questions and combines them into a single response. For SEO, that means you’re no longer just “optimizing for one keyword”. You’re trying to be the best answer to a cluster of closely related questions that can spin out from the original query. The better your topical coverage, the more chances you have to be selected during that fan‑out process.

What Is a Query Fan Out?

Query fan-out is the process AI search systems use to turn one question into many smaller, related questions so they can build a richer answer. Instead of treating your query as a single string to match against pages, the system decomposes it into subtopics and angles, runs separate lookups for each, and then stitches together the final response. When you type something like “best GEO strategy for SaaS,” the system doesn’t just look for that exact phrase. It also spins off internal questions like “what is GEO,” “GEO for SaaS examples,” “pricing and implementation considerations,” and “risks or limitations,” then pulls evidence for each of those behind the scenes.
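To make the mechanics concrete, here is a minimal Python sketch of the pattern described above: expand one query into sub-queries, run a lookup for each, and merge the hits into a single source list. It is a toy under stated assumptions, not how Google, ChatGPT, or Perplexity actually implement fan-out. The fixed templates stand in for the model-generated sub-queries real systems use, and `fan_out`, `answer_sources`, and the in-memory `index` of example URLs are all hypothetical.

```python
# Hypothetical templates; real AI search systems generate sub-queries with a model.
SUBQUERY_TEMPLATES = [
    "what is {topic}",
    "{topic} for {audience} examples",
    "{topic} pricing and implementation considerations",
    "{topic} risks or limitations",
]


def fan_out(query: str, topic: str, audience: str) -> list:
    """Expand one query into the original plus templated sub-queries, without duplicates."""
    expanded = [query] + [
        t.format(topic=topic, audience=audience) for t in SUBQUERY_TEMPLATES
    ]
    return list(dict.fromkeys(expanded))  # dicts keep insertion order


def answer_sources(query: str, topic: str, audience: str, index: dict) -> list:
    """Look up every sub-query in a toy index and merge the hits into one source list."""
    sources = []
    for sub_query in fan_out(query, topic, audience):
        for url in index.get(sub_query, []):
            if url not in sources:  # each page is cited once, however many branches it answers
                sources.append(url)
    return sources


# Hypothetical index mapping sub-queries to pages that answer them.
index = {
    "what is GEO": ["example.com/geo-guide"],
    "GEO for SaaS examples": ["example.com/saas-case-study", "example.com/geo-guide"],
}

print(fan_out("best GEO strategy for SaaS", "GEO", "SaaS"))
print(answer_sources("best GEO strategy for SaaS", "GEO", "SaaS", index))
```

Notice that nothing in the toy index matches the original query string, yet two pages still make the source list because they answer the hidden branches.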

This matters because your visibility in AI answers is no longer tied only to the exact query someone types. What really counts is how often your content can serve as a good answer to those hidden sub-queries that fan out from the original prompt. If your page only addresses a very narrow version of the question, it might match the main query but miss most of the internal branches the system actually uses to assemble its answer. On the other hand, content that covers the broader intent space—definitions, comparisons, edge cases, objections, next steps, and adjacent entities—has more chances to be selected as supporting evidence when the model is combining sources.
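One rough way to check a page against those hidden branches is to score how many sub-queries its text plausibly answers. The helper below, `coverage_score`, is hypothetical: it uses plain word overlap with an arbitrary 0.8 threshold, while real AI search systems match on meaning rather than shared words. Treat the number as a prompt for finding content gaps, not a prediction of visibility.

```python
import re

# Original query plus the sub-queries from the GEO example above, lightly reworded.
SUBQUERIES = [
    "best GEO strategy for SaaS",
    "what is GEO",
    "GEO for SaaS examples",
    "GEO pricing and implementation considerations",
    "GEO risks or limitations",
]


def tokens(text: str) -> set:
    """Lowercase word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def covered_subqueries(page_text: str, subqueries: list, threshold: float = 0.8) -> dict:
    """Flag a sub-query as covered when at least `threshold` of its words appear on the page."""
    page = tokens(page_text)
    flags = {}
    for sq in subqueries:
        words = tokens(sq)
        overlap = len(words & page) / len(words) if words else 0.0
        flags[sq] = overlap >= threshold
    return flags


def coverage_score(page_text: str, subqueries: list, threshold: float = 0.8) -> float:
    """Share of sub-queries the page appears to cover (0.0 to 1.0)."""
    flags = covered_subqueries(page_text, subqueries, threshold)
    return sum(flags.values()) / len(flags) if flags else 0.0


narrow_page = "Our GEO strategy for SaaS: the best approach for SaaS teams."
broad_page = (
    "What is GEO? This guide covers GEO for SaaS examples, pricing and "
    "implementation considerations, risks or limitations, and the best "
    "GEO strategy for SaaS."
)

print(coverage_score(narrow_page, SUBQUERIES))
print(coverage_score(broad_page, SUBQUERIES))
```

The narrow page matches the headline query but scores 0.2 because it misses the other four branches, which is exactly the failure mode described above; the broader page scores 1.0.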

From a GEO or AI SEO standpoint, “optimizing for query fan-out” basically means designing content around the whole conversation, not just the starting query. A strong page anticipates the obvious follow-up questions, related terms, and alternative framings and either answers them directly on the page or links cleanly to supporting content that does. When AI systems perform their fan-out, those pages are eligible for multiple sub-queries, so they show up more often as citations or as the underlying context for generated answers. In practice, that pushes you to think less in terms of one keyword per URL and more in terms of covering a complete intent cluster that maps to how an AI system will explode and explore the user’s question.

What Does QFO Stand For in SEO?

QFO in SEO stands for Query Fan-Out. It describes how AI search systems take one user query and explode it into many related sub‑queries, then use those to find and stitch together an answer.

Query Fan Out Tool

LLM Tips and Tricks

Some of the top tips we have for ranking in LLMs:

- Start a Reddit SEO campaign that isn’t rooted in spamming Reddit

- Use Reddit to research possible LLM prompts

- Create competitive and comparative content

- Don’t worry: LLMs don’t read or scan content, so just write normally!

Don’t Take Anyone’s Word For It: Get Evidence

Whenever someone offers “expert” advice on LLM or AI visibility, ask for a concrete example. Push them to screenshare and show, in real time, exactly how a brand shows up for a specific prompt in Perplexity or Gemini. Reality trumps wishful thinking, every single time. And read our post on How AI SEO actually works.

LLM Query Fan Out Expert Video/Podcast

Google Query Fan Out

One of Google’s original explainers on the QFO

How to Quickly Find Your Query Fan Out

Here’s the DIY method—no “AI optimization,” no expensive new tools:

-

Go to Perplexity or Gemini.

-

Enter your prompt:

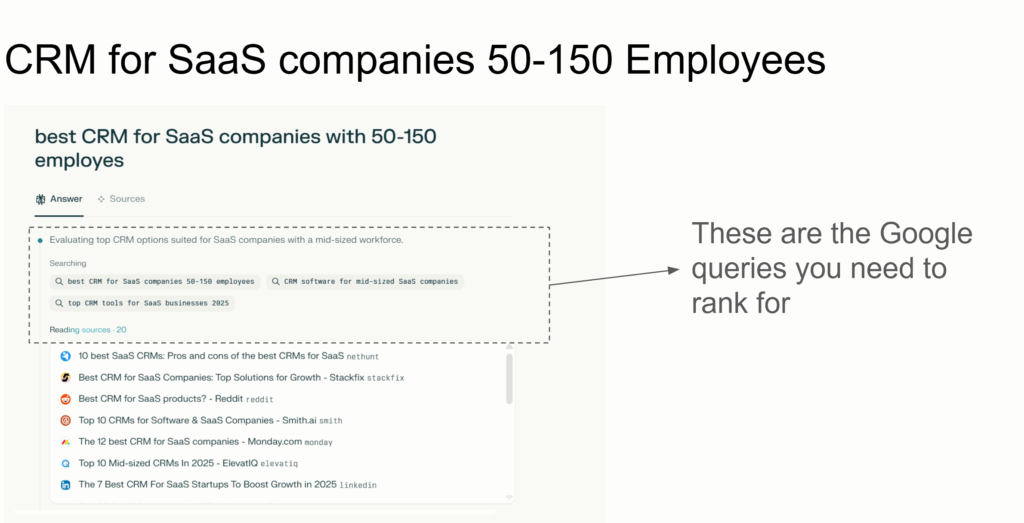

Example: “CRM for SaaS companies 50-150 employees” -

Click the “Steps” tab (or similar).

-

Review all of the queries the LLM spun out in the process.

That’s your Query Fan Out—the true roadmap to LLM visibility.
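Because the queries an LLM spins out can vary from run to run, it may be worth repeating the prompt a few times and tallying what comes back. The sketch below assumes you have pasted each run’s queries from the “Steps” view as plain text, one query per line. `tally_fan_out` and the sample runs are hypothetical, and the code does not call any Perplexity or Gemini API.

```python
from collections import Counter


def normalize(query: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies merge."""
    return " ".join(query.lower().split())


def tally_fan_out(runs: list) -> list:
    """Count how many runs produced each fan-out query, most frequent first.

    Each run is a block of text pasted from the "Steps" view, one query per line.
    """
    counts = Counter()
    for run in runs:
        # A set, so a query repeated within one run counts once for that run.
        queries = {normalize(line) for line in run.splitlines() if line.strip()}
        counts.update(queries)
    # Highest count first; ties sorted alphabetically for stable output.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


# Hypothetical pasted output from two runs of the same prompt.
runs = [
    "CRM for SaaS companies\nCRM pricing for 100 employees\n",
    "crm for saas companies\nCRM integrations for SaaS\n",
]
for query, count in tally_fan_out(runs):
    print(count, query)
```

Queries that show up in most runs are a reasonable place to start writing, since they are the branches the engine seems to rely on most consistently.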

LLM Visibility = Ranking for Fan Out Queries

You don’t need more PR. You don’t need Schema. You don’t need to wait for some LLM integration on Wikipedia.

You need to rank for the queries generated by the LLM’s fan-out.
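If you already track rankings, that goal can be expressed as a simple metric: the share of your fan-out queries where you rank on page one, plus a list of gaps to write for. The sketch below assumes you have collected your organic position for each query yourself (`None` where you don’t rank); `fan_out_visibility`, the sample positions, and the top-10 cutoff are illustrative choices, not an industry standard.

```python
from typing import Dict, List, Optional, Tuple


def fan_out_visibility(
    positions: Dict[str, Optional[int]], top_n: int = 10
) -> Tuple[float, List[str]]:
    """Return (share of fan-out queries ranking in the top N, queries that don't)."""
    if not positions:
        return 0.0, []
    # A query is a gap if you don't rank at all or rank below the cutoff.
    gaps = [q for q, pos in positions.items() if pos is None or pos > top_n]
    share = (len(positions) - len(gaps)) / len(positions)
    return share, gaps


# Hypothetical positions for a handful of fan-out queries.
positions = {
    "crm for saas companies": 3,
    "crm pricing for 100 employees": None,
    "crm integrations for saas": 14,
    "best crm for saas startups": 8,
}
share, gaps = fan_out_visibility(positions)
print(f"Ranking in top 10 for {share:.0%} of fan-out queries")
print("Gaps:", gaps)
```

The gap list doubles as a content brief: each entry is a sub-query the LLM is likely to search for where you currently have nothing on page one.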

What’s Next: Just Do It Yourself

Forget about one-off hacks, Schema tweaks, or PR stunts. Your new SEO goal is simple: discover your Query Fan Out and create content that ranks for those real queries. No waiting, no expensive tools, no intermediaries: just a new, smarter approach to search in the LLM era. It’s time to leave the myths behind and start chasing the queries that actually matter.